
[Machine Learning] Regression

2023. 1. 23. 14:32

01 Loading the Dataset

    # Basic libraries
    import pandas as pd
    import numpy as np
    import matplotlib.pyplot as plt
    import seaborn as sns

    # Load the Boston housing dataset from scikit-learn's datasets module
    # (load_boston was removed in scikit-learn 1.2, so this needs an older version)
    from sklearn import datasets
    housing = datasets.load_boston()

    # The result is dictionary-like, so check its keys
    housing.keys()

    # Convert to pandas DataFrames
    data = pd.DataFrame(housing['data'], columns=housing['feature_names'])
    target = pd.DataFrame(housing['target'], columns=['Target'])

    # Dataset size and a first look at the contents
    print(data.shape)
    print(target.shape)
    print(data.head())
    print(housing['feature_names'])
    print(target.head())

    # Combine features and target into one DataFrame
    df = pd.concat([data, target], axis=1)
    df.head(2)

housing.keys()

print(housing['feature_names']) / print(target.head())

df = pd.concat([data, target], axis=1)

02 Exploratory Data Analysis (EDA)

    # Correlation matrix
    df_corr = df.corr()

    # Draw a heatmap
    plt.figure(figsize=(10, 10))
    sns.set(font_scale=0.8)
    sns.heatmap(df_corr, annot=True, cbar=False)
    plt.show()

    # Rank the features by the absolute value of their correlation with Target
    corr_order = df_corr.loc[:'LSTAT', 'Target'].abs().sort_values(ascending=False)
    corr_order

    # Select the four features most strongly correlated with Target
    plot_cols = ['Target', 'LSTAT', 'RM', 'PTRATIO', 'INDUS']
    plot_df = df.loc[:, plot_cols]
    plot_df.head()

    # Show a fitted linear regression line with regplot
    plt.figure(figsize=(10, 10))
    print(plot_cols[1:])
    for idx, col in enumerate(plot_cols[1:]):
        print('idx=', idx, 'col=', col)
        ax1 = plt.subplot(2, 2, idx+1)  # place each plot in one cell of a 2x2 grid
        # regplot draws the scatter plot and the fitted line together
        sns.regplot(x=col, y=plot_cols[0], data=plot_df, ax=ax1)
    plt.show()

regplot
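The ranking step above sorts features by the absolute value of their correlation with the target, so a strongly negative relationship ranks as high as a strongly positive one. The following is a minimal sketch of that idea on synthetic data; the feature names (`strong_neg`, `weak_pos`, `noise`) and all values are hypothetical and are not taken from the Boston dataset.

```python
import numpy as np

# Hypothetical synthetic data (not the Boston dataset)
rng = np.random.default_rng(0)
n = 200
target_toy = rng.normal(size=n)
features = {
    "strong_neg": -2.0 * target_toy + rng.normal(scale=0.1, size=n),
    "weak_pos": 0.5 * target_toy + rng.normal(scale=2.0, size=n),
    "noise": rng.normal(size=n),
}

# Pearson correlation of each feature with the target
corr_with_target = {
    name: float(np.corrcoef(values, target_toy)[0, 1])
    for name, values in features.items()
}

# Sort by absolute correlation, largest first (the sign is ignored)
ranking = sorted(corr_with_target,
                 key=lambda name: abs(corr_with_target[name]),
                 reverse=True)

for name in ranking:
    print(f"{name}: {corr_with_target[name]:+.3f}")
```

Without the absolute value, the strongly negative feature would be sorted to the bottom of the list even though it carries the most information about the target.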

    # Distribution of the Target values
    sns.displot(x='Target', kind='hist', data=df)
    plt.show()

Distribution of Target

03 Data Preprocessing

Feature scaling

    # Apply scikit-learn's MinMaxScaler
    from sklearn.preprocessing import MinMaxScaler
    scaler = MinMaxScaler()

    df_scaled = df.iloc[:, :-1]
    scaler.fit(df_scaled)
    df_scaled = scaler.transform(df_scaled)

    # Write the scaled values back into the DataFrame
    df.iloc[:, :-1] = df_scaled[:, :]
    df.head()

MinMaxScaler is applied to every column except the last one, 'Target', rescaling the values to the 0-1 range.

df.head()
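Min-max scaling maps each column to the range 0 to 1 by subtracting the column minimum and dividing by the column range. The sketch below reproduces that arithmetic with NumPy on a small hypothetical array; `min_max_scale` and `X_toy` are illustrative names, not part of the original post.

```python
import numpy as np

def min_max_scale(X):
    """Rescale each column of X to [0, 1] using that column's min and max."""
    X = np.asarray(X, dtype=float)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    # Guard against constant columns to avoid division by zero
    col_range[col_range == 0] = 1.0
    return (X - col_min) / col_range

# Hypothetical toy data: two features on very different scales
X_toy = np.array([[1.0, 100.0],
                  [2.0, 300.0],
                  [4.0, 200.0]])

X_toy_scaled = min_max_scale(X_toy)
print(X_toy_scaled)
```

After scaling, both columns span the same range, so a feature measured in large units no longer dominates one measured in small units.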

Splitting into training and test sets

    # Train-test split
    from sklearn.model_selection import train_test_split
    X_data = df.loc[:, ['LSTAT', 'RM']]
    y_data = df.loc[:, 'Target']
    X_train, X_test, y_train, y_test = train_test_split(X_data, y_data,
                                                        test_size=0.2,
                                                        shuffle=True,
                                                        random_state=12)
    print(X_train.shape, y_train.shape)
    print(X_test.shape, y_test.shape)

04 Baseline Model - Linear Regression

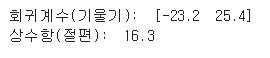

    # Linear regression model
    from sklearn.linear_model import LinearRegression
    lr = LinearRegression()
    lr.fit(X_train, y_train)

    print("Coefficients (slopes): ", np.round(lr.coef_, 1))
    print("Intercept: ", np.round(lr.intercept_, 1))

    # Predict on the test set
    y_test_pred = lr.predict(X_test)

    # Plot predicted and actual values against LSTAT
    plt.figure(figsize=(10, 5))
    plt.scatter(X_test['LSTAT'], y_test, label='y_test')
    plt.scatter(X_test['LSTAT'], y_test_pred, c='r', label='y_pred')
    plt.legend(loc='best')
    plt.show()

    # Evaluate with mean squared error on both splits
    from sklearn.metrics import mean_squared_error
    y_train_pred = lr.predict(X_train)
    train_mse = mean_squared_error(y_train, y_train_pred)
    print("Train MSE: %.4f" % train_mse)

    y_test_pred = lr.predict(X_test)
    test_mse = mean_squared_error(y_test, y_test_pred)
    print("Test MSE: %.4f" % test_mse)

lr.coef_, lr.intercept_
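The coefficients and intercept of a linear regression are the least-squares solution, and the MSE is the average squared residual. As a rough sketch of what those numbers mean, the example below solves the same kind of problem with `np.linalg.lstsq` on hypothetical noise-free data; `X_toy`, `true_coef`, `true_intercept`, and the `mse` helper are assumptions made for illustration only.

```python
import numpy as np

# Hypothetical noise-free data: y = -20*x1 + 25*x2 + 15
rng = np.random.default_rng(12)
X_toy = rng.uniform(0.0, 1.0, size=(50, 2))
true_coef = np.array([-20.0, 25.0])
true_intercept = 15.0
y_toy = X_toy @ true_coef + true_intercept

# Append a column of ones so the intercept is learned as one more weight
X_design = np.column_stack([X_toy, np.ones(len(X_toy))])
solution, *_ = np.linalg.lstsq(X_design, y_toy, rcond=None)
coef, intercept = solution[:-1], solution[-1]

def mse(y_true, y_pred):
    """Mean squared error: the average of the squared residuals."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

y_toy_pred = X_toy @ coef + intercept
print("coef:", np.round(coef, 1))
print("intercept:", np.round(intercept, 1))
print("MSE: %.4f" % mse(y_toy, y_toy_pred))
```

Because the toy data contain no noise, the fit recovers the generating coefficients and the MSE is essentially zero; on real data the residuals do not vanish, which is why the train and test MSE are compared.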

Distribution of predicted and actual values

Evaluation

05 Cross-Validation

    # cross_val_score function
    from sklearn.model_selection import cross_val_score
    lr = LinearRegression()
    # The scorer returns negative MSE (higher is better), so flip the sign
    mse_scores = -1 * cross_val_score(lr, X_train, y_train, cv=5,
                                      scoring='neg_mean_squared_error')
    print("MSE of each fold: ", np.round(mse_scores, 4))
    print("Mean MSE: %.4f" % np.mean(mse_scores))
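To make the mechanics of 5-fold cross-validation concrete, here is a minimal NumPy sketch that splits the rows into contiguous, unshuffled folds, fits a least-squares model on four of them, and scores the fifth. The helpers `fit_linear`, `predict_linear`, and `k_fold_mse`, as well as the toy data, are hypothetical and only approximate what `cross_val_score` does internally.

```python
import numpy as np

def fit_linear(X, y):
    """Least-squares weights; the last entry is the intercept."""
    X_design = np.column_stack([X, np.ones(len(X))])
    weights, *_ = np.linalg.lstsq(X_design, y, rcond=None)
    return weights

def predict_linear(weights, X):
    return X @ weights[:-1] + weights[-1]

def k_fold_mse(X, y, k=5):
    """Return the validation MSE of each of k contiguous folds."""
    folds = np.array_split(np.arange(len(X)), k)
    scores = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        weights = fit_linear(X[train_idx], y[train_idx])
        residuals = y[val_idx] - predict_linear(weights, X[val_idx])
        scores.append(np.mean(residuals ** 2))
    return np.array(scores)

# Hypothetical toy data: linear signal plus unit-variance noise
rng = np.random.default_rng(12)
X_toy = rng.uniform(0.0, 1.0, size=(100, 2))
y_toy = X_toy @ np.array([-20.0, 25.0]) + 15.0 + rng.normal(size=100)

mse_scores = k_fold_mse(X_toy, y_toy, k=5)
print("MSE of each fold: ", np.round(mse_scores, 4))
print("Mean MSE: %.4f" % mse_scores.mean())
```

Each row is used for validation exactly once, so the mean of the fold scores is a less split-dependent estimate than a single train-test evaluation.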

06 L1/L2 Regularization

    # Degree-2 polynomial transform
    from sklearn.preprocessing import PolynomialFeatures
    pf = PolynomialFeatures(degree=2)
    X_train_poly = pf.fit_transform(X_train)
    print("Original training set: ", X_train.shape)
    print("Degree-2 polynomial training set: ", X_train_poly.shape)

    # Fit a linear regression model on degree-15 polynomial features
    pf = PolynomialFeatures(degree=15)
    X_train_poly = pf.fit_transform(X_train)

    lr = LinearRegression()
    lr.fit(X_train_poly, y_train)

    # Predict and evaluate on the training and test data
    y_train_pred = lr.predict(X_train_poly)
    train_mse = mean_squared_error(y_train, y_train_pred)
    print("Train MSE: %.4f" % train_mse)

    # Reuse the transformer fitted on the training data; do not refit on the test set
    X_test_poly = pf.transform(X_test)
    y_test_pred = lr.predict(X_test_poly)
    test_mse = mean_squared_error(y_test, y_test_pred)
    print("Test MSE: %.4f" % test_mse)
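The polynomial transform grows the feature count quickly. With a bias column included, n input features expanded to degree d yield C(n + d, d) columns. The sketch below, using only the standard library, counts and lists those terms; `n_poly_features` and `poly_terms` are illustrative helpers, not scikit-learn functions.

```python
from itertools import combinations_with_replacement
from math import comb

def n_poly_features(n_features, degree):
    """Number of monomials of total degree <= degree, including the bias term."""
    return comb(n_features + degree, degree)

def poly_terms(names, degree):
    """List every monomial of total degree <= degree over the given names."""
    terms = []
    for d in range(degree + 1):
        for combo in combinations_with_replacement(names, d):
            terms.append(" * ".join(combo) if combo else "1")
    return terms

print(poly_terms(["LSTAT", "RM"], 2))
# ['1', 'LSTAT', 'RM', 'LSTAT * LSTAT', 'LSTAT * RM', 'RM * RM']

print(n_poly_features(2, 2))   # 6
print(n_poly_features(2, 15))  # 136
```

Going from degree 2 to degree 15 takes the two input features from 6 columns to 136, which gives an unregularized linear model far more freedom to fit noise in the training set.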

    # How the model fit changes with the polynomial degree
    plt.figure(figsize=(15, 5))
    for n, deg in enumerate([1, 2, 15]):
        ax1 = plt.subplot(1, 3, n+1)
        # Fit a polynomial regression model for each degree (LSTAT only)
        pf = PolynomialFeatures(degree=deg)
        X_train_poly = pf.fit_transform(X_train.loc[:, ['LSTAT']])
        X_test_poly = pf.transform(X_test.loc[:, ['LSTAT']])  # transform only, no refit
        lr = LinearRegression()
        lr.fit(X_train_poly, y_train)
        y_test_pred = lr.predict(X_test_poly)
        # Actual values
        plt.scatter(X_test['LSTAT'], y_test, label='Targets')
        # Predicted values
        plt.scatter(X_test['LSTAT'], y_test_pred, label='Predictions')
        # Title
        plt.title("Degree %d" % deg)
        # Legend
        plt.legend()
    plt.show()

Model fit by polynomial degree (linear regression model)
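The regularized models in this section add a penalty on the size of the coefficients. For the L2 (Ridge) case the solution has a closed form, which makes the shrinkage effect easy to see. The sketch below is a simplified NumPy version under stated assumptions: the data are hypothetical, the intercept is handled by centering and is left unpenalized, and `ridge_fit` is an illustrative helper rather than scikit-learn's implementation.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Closed-form ridge: minimize ||y - Xw - b||^2 + alpha * ||w||^2."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean  # centering keeps the intercept unpenalized
    gram = Xc.T @ Xc + alpha * np.eye(X.shape[1])
    coef = np.linalg.solve(gram, Xc.T @ yc)
    intercept = y_mean - x_mean @ coef
    return coef, intercept

# Hypothetical toy data: a linear signal expanded to degree-5 polynomial features
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=30)
X_poly_toy = np.column_stack([x ** d for d in range(1, 6)])
y_toy = 3.0 * x + rng.normal(scale=0.3, size=30)

alphas = [0.01, 2.5, 100.0]
coef_norms = []
for alpha in alphas:
    coef, intercept = ridge_fit(X_poly_toy, y_toy, alpha)
    coef_norms.append(float(np.linalg.norm(coef)))
    print("alpha=%6.2f  ||coef||=%.4f" % (alpha, coef_norms[-1]))
```

A larger `alpha` pulls the coefficient vector toward zero, trading a little training error for a smoother model. Lasso (L1) has no closed form of this kind and tends to set some coefficients exactly to zero instead.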

    # X_train_poly / X_test_poly are the degree-15 features from the preceding step

    # Ridge (L2 regularization)
    from sklearn.linear_model import Ridge
    rdg = Ridge(alpha=2.5)
    rdg.fit(X_train_poly, y_train)

    y_train_pred = rdg.predict(X_train_poly)
    train_mse = mean_squared_error(y_train, y_train_pred)
    print("Train MSE: %.4f" % train_mse)
    y_test_pred = rdg.predict(X_test_poly)
    test_mse = mean_squared_error(y_test, y_test_pred)
    print("Test MSE: %.4f" % test_mse)

    # Lasso (L1 regularization)
    from sklearn.linear_model import Lasso
    las = Lasso(alpha=0.05)
    las.fit(X_train_poly, y_train)

    y_train_pred = las.predict(X_train_poly)
    train_mse = mean_squared_error(y_train, y_train_pred)
    print("Train MSE: %.4f" % train_mse)
    y_test_pred = las.predict(X_test_poly)
    test_mse = mean_squared_error(y_test, y_test_pred)
    print("Test MSE: %.4f" % test_mse)

    # ElasticNet (combined L1/L2 regularization)
    from sklearn.linear_model import ElasticNet
    ela = ElasticNet(alpha=0.01, l1_ratio=0.7)
    ela.fit(X_train_poly, y_train)

    y_train_pred = ela.predict(X_train_poly)
    train_mse = mean_squared_error(y_train, y_train_pred)
    print("Train MSE: %.4f" % train_mse)
    y_test_pred = ela.predict(X_test_poly)
    test_mse = mean_squared_error(y_test, y_test_pred)
    print("Test MSE: %.4f" % test_mse)

07 Tree-Based Models - Nonlinear Regression

    # Decision tree
    from sklearn.tree import DecisionTreeRegressor
    dtr = DecisionTreeRegressor(max_depth=3, random_state=12)
    dtr.fit(X_train, y_train)

    y_train_pred = dtr.predict(X_train)
    train_mse = mean_squared_error(y_train, y_train_pred)
    print("Train MSE: %.4f" % train_mse)
    y_test_pred = dtr.predict(X_test)
    test_mse = mean_squared_error(y_test, y_test_pred)
    print("Test MSE: %.4f" % test_mse)

    # Random forest
    from sklearn.ensemble import RandomForestRegressor
    rfr = RandomForestRegressor(max_depth=3, random_state=12)
    rfr.fit(X_train, y_train)

    y_train_pred = rfr.predict(X_train)
    train_mse = mean_squared_error(y_train, y_train_pred)
    print("Train MSE: %.4f" % train_mse)
    y_test_pred = rfr.predict(X_test)
    test_mse = mean_squared_error(y_test, y_test_pred)
    print("Test MSE: %.4f" % test_mse)

    # XGBoost
    from xgboost import XGBRegressor
    xgbr = XGBRegressor(objective='reg:squarederror', max_depth=3, random_state=12)
    xgbr.fit(X_train, y_train)

    y_train_pred = xgbr.predict(X_train)
    train_mse = mean_squared_error(y_train, y_train_pred)
    print("Train MSE: %.4f" % train_mse)
    y_test_pred = xgbr.predict(X_test)
    test_mse = mean_squared_error(y_test, y_test_pred)
    print("Test MSE: %.4f" % test_mse)
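A regression tree is built from repeated threshold splits, each chosen to reduce the squared error within the resulting groups. As a rough illustration of a single such split (a depth-1 tree on one feature), the sketch below searches every midpoint between sorted values; `best_split`, `stump_predict`, and the toy arrays are hypothetical and far simpler than a real tree implementation.

```python
import numpy as np

def best_split(x, y):
    """Find the threshold on x that minimizes the summed squared error
    of predicting each side by its own mean."""
    order = np.argsort(x)
    x_sorted, y_sorted = x[order], y[order]
    best_threshold, best_sse = None, np.inf
    for i in range(1, len(x_sorted)):
        if x_sorted[i] == x_sorted[i - 1]:
            continue  # no threshold can separate identical values
        left, right = y_sorted[:i], y_sorted[i:]
        sse = (np.sum((left - left.mean()) ** 2)
               + np.sum((right - right.mean()) ** 2))
        if sse < best_sse:
            best_sse = sse
            best_threshold = (x_sorted[i - 1] + x_sorted[i]) / 2
    return best_threshold, float(best_sse)

def stump_predict(x_train, y_train, threshold, x_new):
    """Predict the mean target of whichever side of the threshold x_new falls on."""
    left_mean = y_train[x_train <= threshold].mean()
    right_mean = y_train[x_train > threshold].mean()
    return np.where(np.asarray(x_new) <= threshold, left_mean, right_mean)

# Hypothetical toy data with an obvious step between x=3 and x=10
x_toy = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
y_toy = np.array([5.0, 5.0, 5.0, 20.0, 20.0, 20.0])

threshold, sse = best_split(x_toy, y_toy)
print("threshold:", threshold)  # 6.5
print("SSE:", sse)              # 0.0
print(stump_predict(x_toy, y_toy, threshold, [2.5, 11.5]))
```

Because predictions are piecewise constant rather than a straight line, stacking such splits lets tree models capture nonlinear relationships, and ensembles such as random forests and gradient boosting combine many of these trees.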
